In October 2023, I did something I never expected to do: I performed stand-up comedy.

I wrote six minutes of jokes from the perspective of a ‘philosopher from Mars’ who had just landed on Earth. I practised the set repeatedly in front of the mirror for weeks and then forced myself to deliver it in front of a crowd. Thankfully, they laughed.

The immediate feedback was incredibly positive, so much so that I performed at the Raw Comedy Heats in Auckland a few weeks later. I was honoured to stand on the stage at The Classic Comedy Club – a legendary New Zealand (Aotearoa) institution – and elicit chuckles from a room full of confused patrons who were not quite sure how to take the slightly unhinged ‘alien’ who had just landed.

Yet, as a person who prefers to ‘work behind the scenes’, I found standing up in front of a room full of people so anxiety-inducing that I felt I needed to sleep for about three days afterwards. I’m happy on camera, but much shyer in front of a crowd. Performing as a character meant I didn’t have to ‘expose’ myself so intimately to the audience and made me less self-conscious about my perceived awkwardness, but it still felt like running a marathon. So, while I revelled in the exciting new process of writing and performing jokes, I knew almost instantly that I wouldn’t enjoy being a full-time stand-up comedian. I loved the Martian alter ego I’d created (Kae Zar), and ‘writing funnies’ is a very enjoyable hobby…

…but how to channel this creative desire?

A fellow comedian told me about the New Zealand Fringe Festival and pointed out that I could create an online project for it. That way, I could keep writing jokes, develop my comedy ‘voice’ and only perform if and when I was ready. With a background in digital media, I could lean on my existing skills while learning new ones.

And so “Kae Zar’s from Mars” was born.

***

I sat down to write, and the script came to life over a series of afternoons and weekends (thanks, in part, to this amazing free course from the University of East Anglia via FutureLearn). The course involved selecting a screenplay and analysing it; I chose ‘Jojo Rabbit’ by NZ’s own Taika Waititi. I learned about Kurt Vonnegut’s ‘Story Shapes’ and chose the ‘Man in Hole’ shape. As a writer with little previous experience of fictional narrative, I also highly recommend John Yorke’s “Into The Woods” for a concise yet comprehensive guide to story structure. Unfortunately, due to time constraints, I ended up using only around a third of the story/script, but I am currently expanding it into a complete version for future festivals.

Man in Hole: “The main character gets into trouble then gets out of it again and ends up better for the experience.” – Kurt Vonnegut.

***

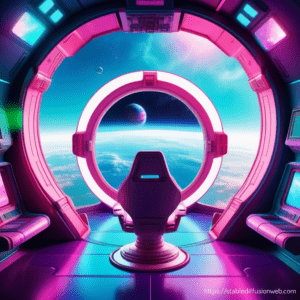

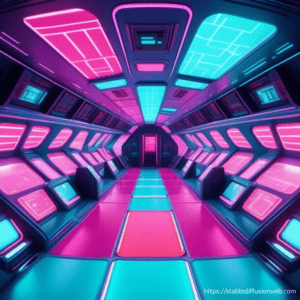

Imagining my Martian character travelling to Earth on their spacecraft, I used Stable Diffusion (Web) to ideate. Generative AI (here, text-to-image AI) is still in its infancy, and the ethical debate around its use is a hot-button topic that is unlikely to abate anytime soon. I’m discussing it with my first-year undergraduate seminar groups as we speak (hello to any of my students who may be reading!). Ethics aside, seeing the spaceship interiors I had envisioned rendered in a variety of visual ‘styles’ made the story feel tangible and was extremely helpful in writing my first narrative script.

***

Images generated with Stable Diffusion (Web) in ‘futuristic-vaporwave’ style (November 2023). Prompts (left to right): “view from inside of spaceship looking out into space to Earth with futuristic chair in centre”, “bed inside of space ship high angle” and “inside of empty space ship birds eye view”.

If you’d like to experiment further with Stable Diffusion, these resources were helpful:

Stable Diffusion prompt: a definitive guide;

80+ Best Stable Diffusion Styles You Should Definitely Try.

***

These AI-generated images first sparked the idea that I could use them as backgrounds and ‘green screen’ myself into the foreground as Kae Zar the Martian. So I began rewriting the script with this concept in mind. I researched affordable green screens and lighting on Amazon, but soon realised the challenges involved and the potential for the result to look, well, a bit crap.

Green screen technology is wonderful but requires significant space and lighting setups to look even moderately professional and to effectively represent the world that I was writing about. And while I wasn’t going for Hollywood-level aesthetics (all of my favourite films fall into the ‘cult classic’ side of things), I wanted the film to be the best that I could make with the time and budget that I had. If I went with green screen, I just couldn’t see how I could make it look professional on a budget – so I kept thinking.

The ethical considerations around using AI-generated images also made me hesitant to use them at this stage. And while text-to-video is advancing quickly, to my eye the results still look like a series of still images stitched together. Plus, it looks weird. Or, at least, it looks like AI. I wanted the film to look realistic and – well – human.

***

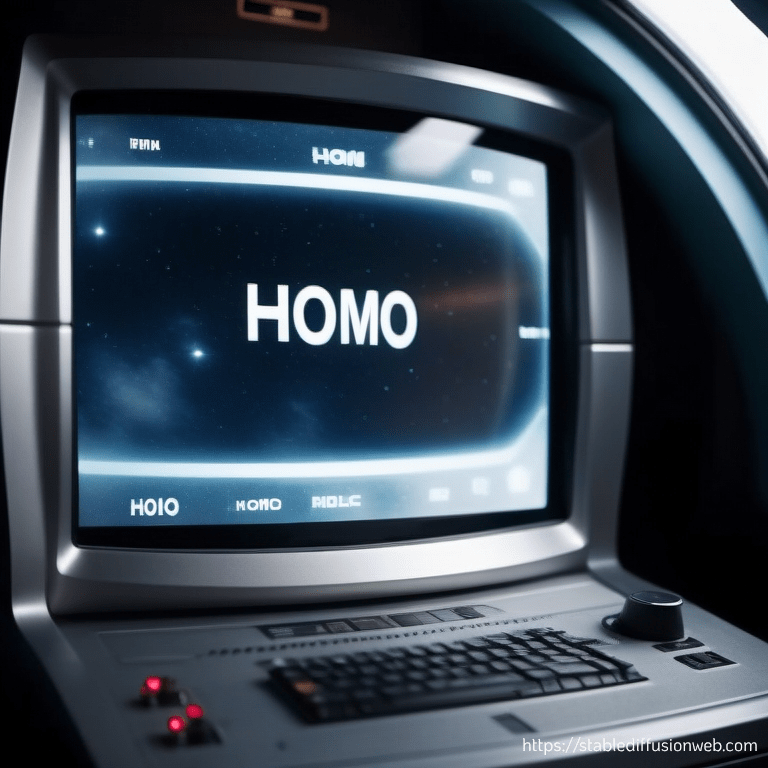

After further ideation, I came up with the idea of a piece of technology called ‘Homo’ that helps Kae Zar understand the human race (Homo sapiens). Heavily inspired by Douglas Adams’ The Hitchhiker’s Guide to the Galaxy, I wondered whether the focus could be on the screen, with the set (AI-generated images) used peripherally.

Image generated with Stable Diffusion (Web) in ‘cinematic-default’ style (November 2023). Prompt: “a computer screen in a space ship that says HOMO”.

Then, my thinking pivoted to Creative Commons and royalty-free video footage. Perhaps I could somehow use video instead of still images, and this would ‘hide’ some of the less-than-perfect green screen? Maybe under some static/distortion, like a 90s documentary? Yet, as the idea of using video footage progressed, I realised that I could avoid green screen altogether (and my fear of putting myself in front of the camera) and use point-of-view (POV) footage instead. With POV footage, the viewer could participate in Homo’s ‘training’ and experience human interaction from Kae Zar’s perspective.

I began searching for royalty-free video footage on Pexels and Pixabay, two of the internet’s largest repositories of stock video, but quickly realised that downloading each video before editing would take too much time (plus, did I mention I did all of this on a 10-year-old MacBook Air with limited storage?).

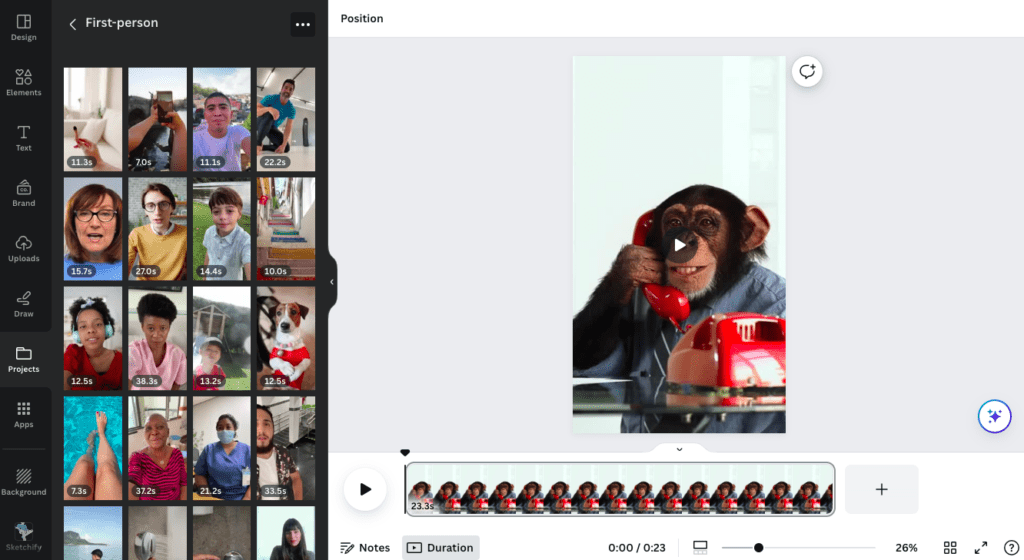

So, instead, I turned to Canva. I already had a Pro account that I used for social media posts and designs, but I’d never explored its stock video. As I searched for terms such as “First Person Perspective”, “Personal Perspective”, “POV”, “Point of view”, “camera point of view” and “high angle view”, I began to see how much vertical/mobile footage was available.

That made me think: “Why don’t I make my short film for mobile?”.

***

Over a weekend, I saved every piece of relevant footage I could find on Canva into a new project called First-Person, amassing over 250 clips. Using the ‘Mobile Video’ template, I pieced the footage together to illustrate the story I’d written. Then I considered how to use the visuals to add an extra layer of humour to the text. For example, a joke about humans evolving from chimpanzees was illustrated with – you guessed it – video of a chimpanzee.

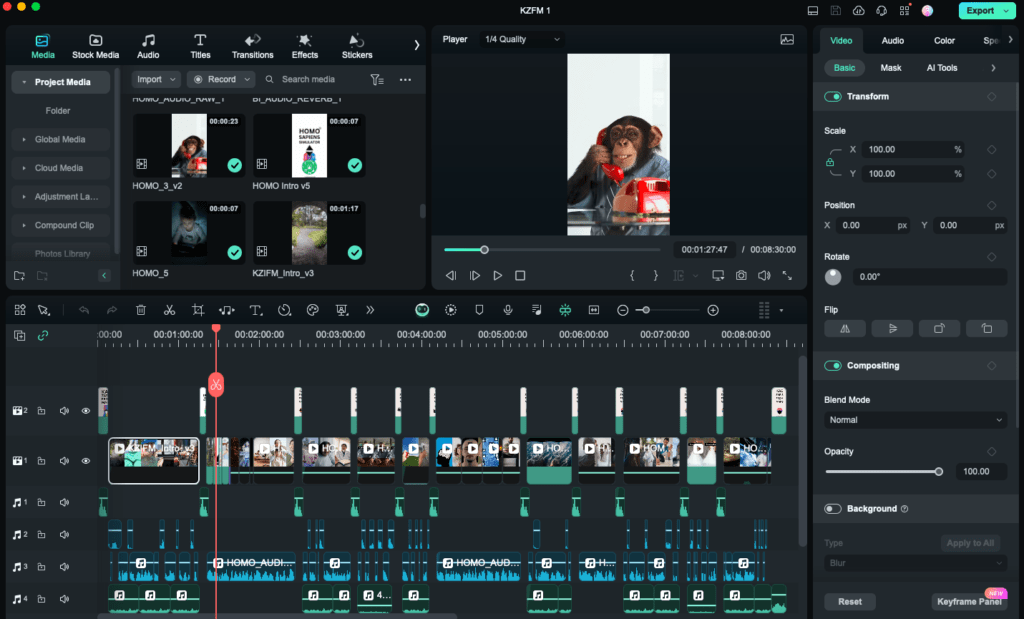

I read the dialogue out loud and timed each joke, then used those estimates to map out the length of each section and piece the footage together. Working this way saved a lot of time I would otherwise have spent downloading individual videos, and the process was very straightforward. I created 1-minute sections in Canva (each with ≈20 clips of 2-3 seconds spliced together), then exported them to Wondershare Filmora. There are several free and low-cost video editing programs out there, but Wondershare Filmora was well reviewed for creating mobile-friendly vertical video (9:16 aspect ratio). After exporting from Canva, I edited the footage further in Filmora, which became essential once I’d recorded the audio: I made some slight changes that shifted the timing by a few seconds.
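If you’d like to rough out this kind of timing before opening an editor, the read-aloud-and-estimate idea can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not a record of how this film was timed: it assumes a speaking pace of about 150 words per minute (time yourself reading the script aloud and adjust) and stock clips of roughly three seconds, and the section names and placeholder text are hypothetical.

```python
import math

# Assumption: a rough speaking pace. Time yourself reading the script aloud and adjust.
WORDS_PER_MINUTE = 150


def estimate_seconds(text: str, wpm: int = WORDS_PER_MINUTE) -> float:
    """Estimate how long a passage takes to read aloud, from its word count."""
    return len(text.split()) / wpm * 60


def clips_needed(seconds: float, clip_length: float = 3.0) -> int:
    """Number of stock clips of roughly clip_length seconds needed to cover a section."""
    return math.ceil(seconds / clip_length)


def plan_sections(sections, clip_length: float = 3.0):
    """Turn (name, text) pairs into (name, start, duration, clips) rows on a running timeline."""
    plan = []
    start = 0.0
    for name, text in sections:
        duration = estimate_seconds(text)
        plan.append((name, start, duration, clips_needed(duration, clip_length)))
        start += duration
    return plan


if __name__ == "__main__":
    # Hypothetical sections: repeated placeholder words stand in for real dialogue.
    sections = [
        ("opening monologue", "word " * 150),
        ("chimpanzee joke", "word " * 75),
    ]
    for name, start, duration, clips in plan_sections(sections):
        print(f"{name:18} starts at {start:5.1f}s, lasts {duration:5.1f}s, needs ~{clips} clips")
```

Under these assumptions, a 150-word section comes out at 60 seconds and about 20 three-second clips, in line with the ≈20 clips per one-minute section described above. The estimates will still shift once the real audio is recorded, which is why a final pass in the video editor matters.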

I also varied the type of POV footage across different ‘scenes’ to differentiate the perspectives. When Kae Zar is speaking with BI, all of the footage is shot from the perspective of the active participant, i.e. you can see hands in the frame that could be your own, as though you were looking through your own eyes. Yet, when HOMO the software program is ‘running’ (speaking), the perspective widens, and I used more conventionally cinematic footage shot from behind a camera. Each conversation ‘simulation’ with humans is a single first-person video.

***

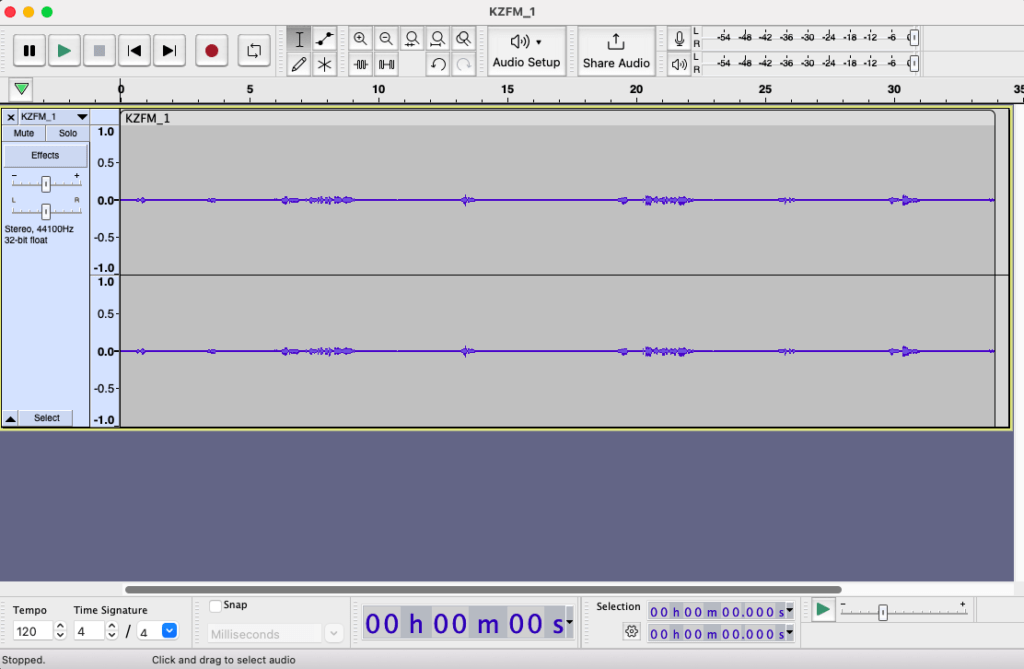

The next step was the audio. I recorded my dialogue in Audacity, a free audio editor. I did little additional processing in Audacity beyond adding some reverb to make it sound as though the voice was coming from a distance (or ‘in space’).

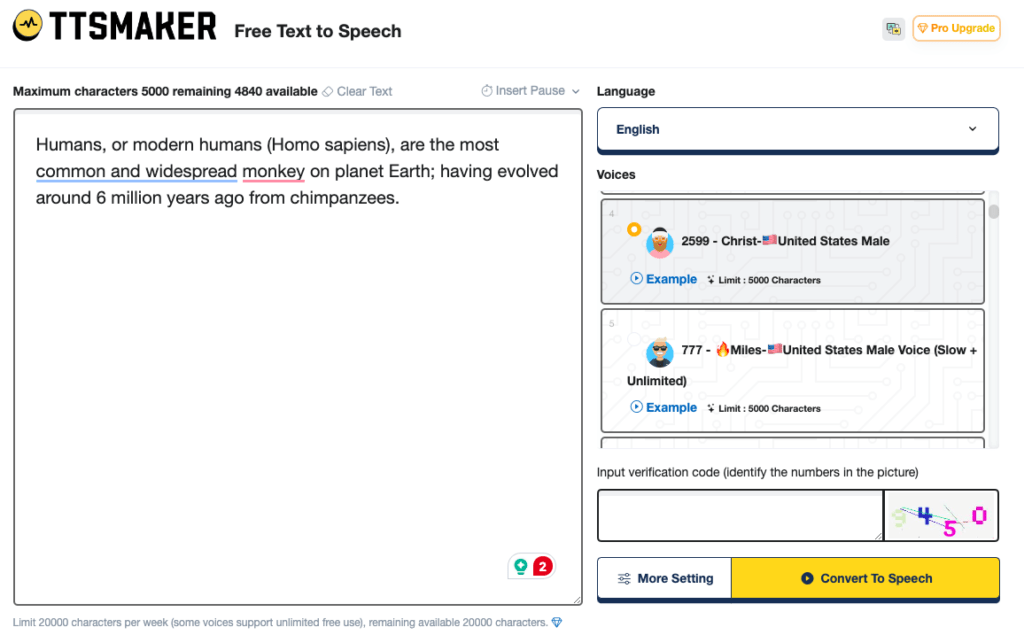

I then used TTSMaker, a free online text-to-speech tool, to generate the voices of the other characters (BI, HOMO, HUMAN 1 and HUMAN 2). While the software is amazing (especially for a free program), I found that certain words were mispronounced and/or sounded “strange”. So I rewrote those passages, swapping in synonyms that the tool read more naturally.

AI voice credits:

BI: 780 Araceli – Global Female Voice.

HOMO: 2599 – Christ – United States Male.

HUMAN 1: 2402 – Robert – United Kingdom Male Voice.

HUMAN 2: 2541 – Lily – New Zealand Female Voice.

Again, I used reverb on the ‘BI’ voice, but this time a larger amount to create an additional sense of distance and otherworldliness.

Finally, I found free music and sound effects at Freesound.

Audio credits:

Short Silly Uke Song by qubodup | License: Attribution 4.0 (CC BY 4.0).

station deep ambience_BG.wav by DaveOfDefeat2248 | License: Attribution NonCommercial 4.0 (CC BY-NC 4.0).

music elevator ext part 3/3 by Jay_You | License: Attribution 4.0 (CC BY 4.0).

Thunka wunka mysterion.wav by Calethos | License: Creative Commons 0 (CC0).

weird laugh.wav by Reitanna | License: Creative Commons 0 (CC0).

***

I then put all the components together in Wondershare Filmora, including additional ‘animations’ I created in Canva, multiple audio tracks, music and sound effects. This wasn’t my first short film, as I studied (and now teach) digital media at Deakin University, but it had been years since I’d made one, and I had never taken on anything so challenging. I certainly learned a lot through trial and error. For anyone attempting video editing for the first time, a few tips:

- Start simple. This project was only around 8 minutes long, which made it achievable as a first attempt at the concept. I learned a lot from the process and plan to improve and build on this initial design.

- ‘Template’ as much as possible. I created one animated ‘card’ in Canva, then duplicated and adjusted it throughout the film. This saved time and gave the film a consistent tone/voice.

- Make notes of what you’re doing as you go. I noted how much reverb I put on each audio track, where each piece of audio came from, etc., and several times I was SO GRATEFUL I had, because a backup failed or I needed to make a change and had forgotten what I’d done a few weeks earlier. Oh, and back up your work. OFTEN.

- Don’t expect perfection or professionalism on your first attempt. It’s impossible. You’re learning, and that’s fine!

Total cost:

Audacity – Free

TTSMaker – Free

Freesound – Free

Wondershare Filmora – Free (Pro Lifetime Subscription ≈$106 NZD)

Canva – Free (Pro Yearly Subscription = $177 NZD/yr)*

*Note: I already had a Canva Pro subscription, but include the price for reference.

***

So, that’s how I created a New Zealand Fringe Festival short film with ≈$100, generative AI, royalty-free video (and a dash of Kurt Vonnegut). Is it perfect? Absolutely not! Will I likely cringe intensely looking back on it in a few years? Guaranteed! Am I proud of it? Yes! Taking an idea from inside your little coconut and putting it out into the world for other people to judge and criticise is terrifying, but it also opens up the possibility that you’ll connect with people who like it and that other (more talented and experienced) people can help you develop your idea.

If you’re thinking you need a lot of money to get your idea out there, you don’t. Just take the leap. You never know where you’ll end up.

I might even see you on Mars.

***

Want to watch the film for free? Click here.